Energy efficiency in cooling is not just about the cost of the kilowatt-hours consumed

Ever since data centers began to account for a significant share of global electricity consumption, energy efficiency has rarely been off the pages of the industry press.

But perhaps this matters only for large and very large data centers that consume tens or hundreds of megawatts? For the owner of a small server room, aren't these costs small compared to everything else?

In fact, some aspects of energy efficiency have a noticeable impact on the economics of even a small facility, and not just through the electricity bill.

For a small facility, the capital cost per kilowatt of IT load is noticeably higher than for a large one: economies of scale come into play. In addition, financing terms for small facilities are usually less favorable than for large projects. So for a small data center, the main costs are the purchase of equipment, its depreciation, and interest on the invested funds. Even if the facility is built with the owner's own money, without borrowing, a fair economic calculation must include interest on the invested funds to reflect the alternative: the money could simply have been deposited in a bank or invested in bonds.
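One common way to fold depreciation and interest into a single annual figure is the capital recovery factor, which turns an up-front investment into an equal yearly charge over the equipment's service life. The sketch below assumes this method (the article does not prescribe one); the function names and all figures (a 200,000 investment, 20 kW of IT load, a 10-year life) are hypothetical.

```python
def capital_recovery_factor(rate: float, years: int) -> float:
    """Share of the initial investment to recover each year so that equal annual
    payments repay it over `years` at annual interest `rate`."""
    if years <= 0:
        raise ValueError("years must be positive")
    if rate == 0:
        return 1.0 / years  # no interest: plain straight-line depreciation
    growth = (1 + rate) ** years
    return rate * growth / (growth - 1)


def annual_cost_per_kw(capex: float, it_load_kw: float, rate: float, years: int) -> float:
    """Annualized capital cost (depreciation plus interest) per kW of IT load."""
    return capex * capital_recovery_factor(rate, years) / it_load_kw


# Hypothetical figures, for illustration only.
for rate in (0.0, 0.05, 0.10):
    cost = annual_cost_per_kw(200_000, 20, rate, 10)
    print(f"interest {rate:.0%}: {cost:,.0f} per kW of IT load per year")
```

At 10% interest the annual charge comes out at about 1.6 times the interest-free figure. Note also that the IT load sits in the denominator: anything that reduces the IT load the room can actually support raises the cost per kilowatt in the same proportion.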

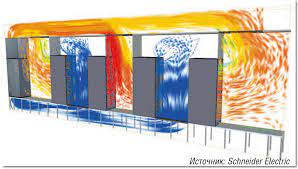

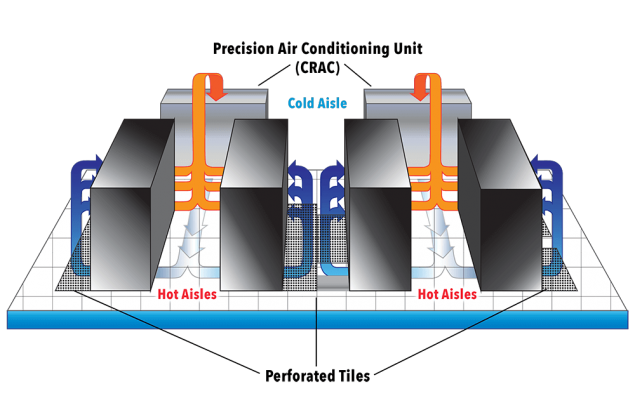

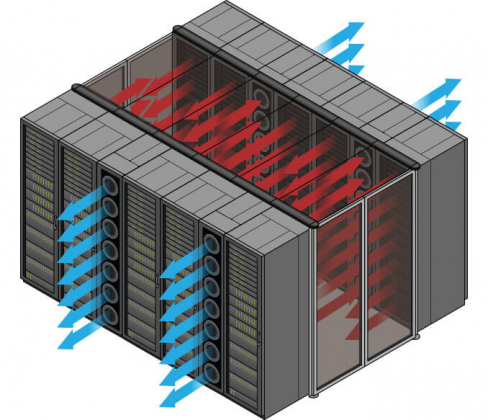

So what happens if, for example, the server room lacks good airflow separation? Ideally, all the warm air from the servers returns to the precision air conditioner, and all the cold air produced by the air conditioner goes to cool the servers. This maximizes the temperature difference between the warm and cold air streams, so each unit of airflow removes the maximum amount of heat from the equipment. It is no accident that modern large data centers physically separate hot and cold aisles with rigid partitions and airtight doors.

If half of the hot air exhausted by the servers short-circuits back to the intake grilles of the IT equipment, and a large part of the cold air from the air conditioner returns to its own intake, bypassing the IT equipment, then the cooling capacity is used inefficiently: the air conditioner ends up cooling "itself".
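The effect of recirculation on the server intake can be estimated with a simple mixing balance. The sketch assumes that a fraction r of the intake air is the servers' own exhaust, that streams mix in proportion to mass flow with constant specific heat, and that the servers raise the air temperature by a fixed ΔT. Solving T_in = (1 - r)·T_supply + r·(T_in + ΔT) gives T_in = T_supply + r·ΔT / (1 - r). The 27 °C intake limit, the 15 K rise, and the function names are hypothetical.

```python
def _recirculation_offset(server_delta_k: float, recirc: float) -> float:
    """Temperature rise at the server intake caused by recirculated exhaust."""
    if not 0 <= recirc < 1:
        raise ValueError("recirc must be in [0, 1)")
    return recirc * server_delta_k / (1 - recirc)


def server_inlet_temp(supply_c: float, server_delta_k: float, recirc: float) -> float:
    """Steady-state intake temperature when a fraction `recirc` of the intake air
    is server exhaust: T_in = T_supply + r * dT / (1 - r)."""
    return supply_c + _recirculation_offset(server_delta_k, recirc)


def max_supply_temp(inlet_limit_c: float, server_delta_k: float, recirc: float) -> float:
    """Warmest supply air that still keeps the server intake at inlet_limit_c."""
    return inlet_limit_c - _recirculation_offset(server_delta_k, recirc)


# Hypothetical: 27 C intake limit, 15 K temperature rise across the servers.
for r in (0.0, 0.2, 0.5):
    print(f"recirculation {r:.0%}: supply air must be at most "
          f"{max_supply_temp(27, 15, r):.1f} C")
```

With half of the intake air recirculated, the supply air must be 15 K colder than with perfect separation to keep the same intake temperature.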

To avoid overheating the servers, we have to lower the supply air temperature at the air conditioner outlet; otherwise, after mixing with some of the hot air, the air actually reaching the servers will be too warm. Meanwhile, the air entering the air conditioner is not the hot server exhaust (modern high-power servers can exhaust air at more than 40 °C) but barely warm air diluted with cold air. The temperature difference shrinks, so more airflow is needed to carry the same heat. Moving this larger airflow takes more energy for both the air conditioner fans and the server fans, and that energy is itself converted into additional heat.
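The relation behind this paragraph is the sensible heat balance Q = ρ·V·c_p·ΔT: for a fixed heat load Q, the required airflow V is inversely proportional to the temperature difference ΔT. The sketch below assumes typical room-air properties (ρ ≈ 1.2 kg/m³, c_p ≈ 1005 J/(kg·K)) and, as an idealized illustration of the fan penalty, the fan affinity law (power proportional to the cube of flow for the same fan and duct system). The 20 kW load and the 15 K and 7.5 K differences are hypothetical.

```python
AIR_DENSITY = 1.2      # kg/m^3, roughly air at ~20 C near sea level
AIR_CP = 1005.0        # J/(kg*K), specific heat of air at constant pressure


def required_airflow_m3s(heat_kw: float, delta_t_k: float) -> float:
    """Volumetric airflow needed to carry heat_kw with temperature rise delta_t_k.

    From Q = rho * V * cp * dT, so V = Q / (rho * cp * dT).
    """
    return heat_kw * 1000.0 / (AIR_DENSITY * AIR_CP * delta_t_k)


def relative_fan_power(flow_ratio: float) -> float:
    """Idealized fan affinity law: for the same fan and duct system,
    power scales with the cube of the flow."""
    return flow_ratio ** 3


design = required_airflow_m3s(20, 15)   # hypothetical 20 kW room, 15 K design delta-T
mixed = required_airflow_m3s(20, 7.5)   # same load, delta-T halved by mixing
ratio = mixed / design
print(f"airflow {design:.2f} -> {mixed:.2f} m^3/s (x{ratio:.1f}), "
      f"fan power x{relative_fan_power(ratio):.0f}")
```

Halving ΔT doubles the airflow; if the same fans had to deliver it, the affinity law predicts roughly eight times the fan power. Real fans have speed limits and real systems deviate from the cube law, so treat the factor as indicative only.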

As a result, the meters show a much lower ratio of IT equipment power to cooling system consumption than the design intended. But the consequence is not just a faster-spinning electricity meter. The capacity of the cooling system, and of the diesel generator sets (DGSs) that power it, will be exhausted at a much lower IT load than planned, so the server room can host far fewer servers than calculated. In other words, the actual cost per unit of IT load will be much higher than it should be, quite possibly several times higher. In the worst case, plans for business expansion or new services will be thwarted. And airflow design errors are sometimes very difficult to correct quickly, for example if the cross-section of the space under the raised floor is too small.
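The capacity loss can be sketched by taking the IT load a room can support as the smaller of two limits: the heat that the air conditioners' fixed airflow can carry at the actual temperature difference, and the generator power left over once cooling consumption is covered. This two-limit model, the parameter names, and all figures (5 m³/s of airflow, a 100 kW generator set, 0.4 vs 0.8 kW of cooling power per kW of IT load) are hypothetical simplifications; other overheads such as UPS losses and lighting are ignored.

```python
AIR_DENSITY = 1.2   # kg/m^3, approximate
AIR_CP = 1005.0     # J/(kg*K), approximate


def usable_it_load_kw(crac_airflow_m3s: float, delta_t_k: float,
                      generator_kw: float, cooling_kw_per_it_kw: float) -> float:
    """IT load supportable under two simplified limits (hypothetical model)."""
    # Heat the fixed air-conditioner airflow can remove at the actual delta-T.
    airflow_limit = AIR_DENSITY * AIR_CP * crac_airflow_m3s * delta_t_k / 1000.0
    # IT load the generator can feed once cooling consumption is added on top.
    power_limit = generator_kw / (1.0 + cooling_kw_per_it_kw)
    return min(airflow_limit, power_limit)


design = usable_it_load_kw(5.0, 12.0, 100.0, 0.4)  # good airflow separation
actual = usable_it_load_kw(5.0, 6.0, 100.0, 0.8)   # mixing halves delta-T
print(f"design {design:.1f} kW, actual {actual:.1f} kW, "
      f"cost per kW of IT load x{design / actual:.2f}")
```

With the same equipment and therefore the same capital outlay, the cost per kilowatt of IT load rises in inverse proportion to the usable load, here by a factor of almost two.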

These risks can be avoided with a correct room layout that takes into account the technical details of day-to-day operation and the experience accumulated in the industry. Such a conceptual design should be done at the earliest stage of building or renovating a server room.